Every major search engine works in a very similar way, following three basic processes to find content and then return it in search results:

- Crawling – finding webpages

- Indexing – categorising and storing the pages in a database

- Ranking – identifying the most relevant pages and putting them in a priority order

A brief look at these three areas will help you understand why SEO works, and why certain things are recommended during the optimisation process.

Crawling

Search engines start with a ‘seed’ list of high-quality pages, for example the BBC or the Open Directory. The sites on this list are regarded as being of very high quality, and are also likely to have editors checking the quality of their external links.

A ‘crawler’, ‘spider’ or ‘bot’ is a computer program that automatically retrieves pages and identifies all of the links on them. Crawling the seed list of sites allows search engines to discover all of the external links from them. They can then crawl all of the newly discovered sites, finding new links from those sites, and so on. Eventually, this process discovers every page that can be reached by following a chain of links from the seed list.

Note that this means that if no-one links to your page (not even you!), search engines will not discover your content without manual intervention.
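To make the idea concrete, here is a minimal sketch of link-following discovery. It is an illustration only, not how any real search engine is built: the `LINKS` dictionary is a made-up, in-memory stand-in for the web, and the page names and the `crawl` function are hypothetical.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "seed.example/home": ["a.example/", "b.example/"],
    "a.example/": ["b.example/", "c.example/post"],
    "b.example/": ["seed.example/home"],
    "c.example/post": [],
    "orphan.example/": ["a.example/"],  # links out, but nothing links to it
}


def crawl(seeds, links):
    """Breadth-first discovery of every page reachable from the seed list."""
    discovered = set(seeds)
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        # A real crawler would download the page and extract its links here.
        for target in links.get(page, []):
            if target not in discovered:
                discovered.add(target)
                queue.append(target)
    return discovered


found = crawl(["seed.example/home"], LINKS)
print(sorted(found))
print("orphan.example/" in found)
```

The orphan page links to other pages, but because nothing links to it, the crawl never reaches it.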

Crawling is a continuous process involving many thousands of computers. Google spent $5 billion on computer equipment in the second quarter of 2014!

At this stage, search engines will immediately discard certain content:

- Broken pages and errors

- Obvious spam

- Types of content that they don’t include in search results (for example, Google retrieves images, but not videos)

If you create a page, and there are links to it, it is highly likely that Google will store it in its database. But this alone does not mean that Google will necessarily rank your page, or that it will attract visitors.

Indexing

Google crawls in excess of 30 trillion web pages. It is not feasible to ‘search’ this amount of content in the way you might, for instance, search for files on an individual computer. Or, at least, it would take an incredibly long time to do so.

Instead, search engines make a database (called an index) of the content, which involves:

- Filing different types of content (e.g. text separately from images)

- Storing certain aspects of the page, including:

  - The ‘name’ of the page (the title)

  - Textual content

  - Links to and from the page

  - The URL

- Removing ‘useless’ information such as some punctuation characters and code

- Deciding the basic keywords a page could rank for

It’s at this early stage that the keywords your pages can rank for are determined (although the final order is not set). Each page will also be given a ‘score’ for each word it uses, based on numerous basic criteria.
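A common way to build this kind of database is an inverted index: a lookup from each word to the pages that use it. The sketch below is a simplified illustration under stated assumptions, not Google’s actual method. The sample `PAGES`, the `tokenize` and `build_index` helpers, and the scoring rule (word count in the text, plus a bonus of 2 if the word appears in the title) are all invented for the example.

```python
import re
from collections import Counter, defaultdict

# Hypothetical crawled pages, keyed by URL.
PAGES = {
    "/tea": {"title": "Brewing tea",
             "text": "Tea needs hot water. Good tea takes time."},
    "/coffee": {"title": "Brewing coffee",
                "text": "Coffee needs hot water and fresh beans."},
}


def tokenize(text):
    """Lower-case the text and keep only words, dropping punctuation."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each word to {url: score} for every page that uses it."""
    index = defaultdict(dict)
    for url, page in pages.items():
        body_counts = Counter(tokenize(page["text"]))
        title_words = set(tokenize(page["title"]))
        for word in set(body_counts) | title_words:
            # Assumed scoring rule: occurrences in the text, +2 for a title match.
            bonus = 2 if word in title_words else 0
            index[word][url] = body_counts[word] + bonus
    return index


index = build_index(PAGES)
print(index["tea"])
print(index["water"])
```

Looking up a word is now a single dictionary access rather than a scan of every page, which is why a page that never uses a word (or loses it during indexing) cannot be found for it later.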

Basic on-page SEO techniques will ensure your pages pass the indexing stage and have the opportunity to rank.

Ranking

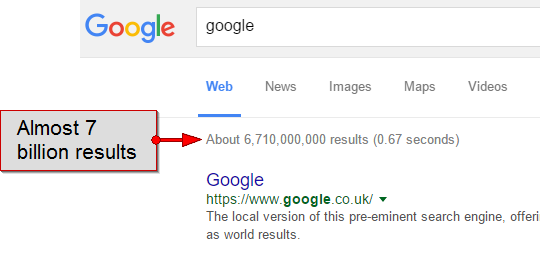

For most searches there are a very large number of results that are a potential match (based on indexing, as described above):

This number is the count of pages matching the word “Google”, according to the indexing process. This is still far too many results to process in detail in a reasonable amount of time. Instead, Google selects only the top 1,000 results, based on indexing scores. At this point, the most basic criteria are still being used, but if your page is not among the top 1,000 selected, nothing you do will enable it to rank in Google’s results.

Once it has the top 1,000 results, Google can then perform more refined processing to come up with a final order for the results – the rankings. This process can be conducted very quickly and so more complex criteria can be used.
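The two-step approach described above (a cheap shortlist, then a more careful re-ordering) can be sketched as follows. This is an illustration under assumptions: the `rank` function, the `BASIC` and `REFINED` score tables, and the shortlist size of 3 used in the demo are all hypothetical, and real ranking criteria are far more involved.

```python
import heapq


def rank(candidates, basic_score, refined_score, shortlist_size=1000):
    """Shortlist by a cheap score, then order the shortlist by a costlier one."""
    # Stage 1: keep only the best candidates according to the basic score.
    shortlist = heapq.nlargest(shortlist_size, candidates, key=basic_score)
    # Stage 2: apply the more expensive scoring to the shortlist only.
    return sorted(shortlist, key=refined_score, reverse=True)


# Hypothetical scores for four pages matching a search.
BASIC = {"/a": 9, "/b": 7, "/c": 5, "/d": 1}
REFINED = {"/a": 0.2, "/b": 0.9, "/c": 0.6, "/d": 1.0}

results = rank(BASIC.keys(), BASIC.get, REFINED.get, shortlist_size=3)
print(results)  # ['/b', '/c', '/a']
```

Note that `/d` has the best refined score but never appears in the results, because it did not make the shortlist in the first stage.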

If you hear discussion of the ‘Google algorithm’, it is usually (and incorrectly) referring to this stage only. Don’t make the common mistake of ignoring the indexing stage, which is equally important.

Ranking criteria are the most complicated and the least understood. These include:

- Interpreting the searcher’s keywords (e.g. correcting errors, or expanding on search words)

- Looking at which external sites link to your page

- Analysis of text content for quality

- Considering the context of a page as part of a website as a whole

Google will also use the ranking process to ‘demote’ pages perceived as having issues that should prevent rankings, including:

- Excessive repetition of keywords

- Hiding content

- ‘Thin’ pages with little useful content

- Piracy

- Copies of other pages’ content

Unless you are making serious errors in your content, or are actively trying to manipulate search engine results, you should mainly be concerned with favourable indexing. Complicated ranking criteria are rarely affected by the quality of an individual page’s copy.
